Voice First: The Next Paradigm Shift is Coming

The future of interaction is here, and AI agents are leading the charge. Are you ready to embrace it?

Introduction

Remember when “Mobile First” was the guiding principle that upended the desktop era, placing smartphones at the center of our digital lives? That transformation fundamentally changed our habits, making everything from online shopping to remote work accessible from anywhere. Today, we stand on the brink of another paradigm shift: Voice First. In this new era, our voices become the primary mode of interaction with our devices, and AI agents not only execute our commands but anticipate our needs, prompting us to reimagine what’s possible in a world that’s no longer tethered to screens.

Voice-based interfaces are no longer science fiction. They’ve quietly entered our daily routines, from asking a smart speaker about the weather to dictating notes on the go. Yet we are only beginning to see the transformative potential of a future in which voice is the most natural, intuitive way to engage with technology. In this article, we’ll explore why “Voice First” matters, what defines a robust voice system, why the timing is crucial right now, the challenges on the horizon, and how you can be part of this revolution.

Why “Voice First”?

At its core, “Voice First” addresses a fundamental truth: our eyes and hands are often occupied—driving, cooking, or doing other tasks—while our voices remain free to command and inquire. Beyond convenience, Voice First is a powerful equalizer, helping users of different abilities and literacy levels interact with technology more naturally.

By enabling a frictionless flow of conversation, voice-based interactions can feel more intuitive and human-like. We already see people asking smart speakers for the weather or using voice notes to capture ideas on the go. But these are only stepping stones. As voice interactions become more sophisticated, they won’t merely respond; they’ll adapt and anticipate our needs, ushering in a new era of digital experiences.

What Is “Voice First”?

“Voice First” defines a world where we converse with digital systems much like we converse with each other. At the highest level, it entails:

Natural Language Understanding

Natural language understanding is the bedrock of the “Voice First” paradigm. It enables systems to interpret spoken commands accurately, including colloquial phrases, filler words, and subtle inflections that occur in everyday conversation. This capability extends beyond mere word recognition, embracing the complexities of linguistic diversity, cultural nuances, and situational context.

For example, advanced Voice AI systems are designed to seamlessly handle code-switching, where users shift between multiple languages mid-sentence, or adapt to hyper-local accents and dialects. This adaptability makes Voice First technology indispensable in scenarios like multilingual customer support, international education, or cross-cultural telemedicine, breaking down barriers to effective communication.

Looking ahead, this level of language sophistication will redefine global interactions, particularly when integrated with real-time translation services. By enabling users to interact naturally across linguistic divides, Voice AI positions itself as a universal connector, transforming how businesses, communities, and individuals interact on a global scale.

Multimodal Interface

“Voice First” does not imply the replacement of other interaction methods; rather, it emphasizes speech as the primary input while integrating additional cues such as visuals, text, and even haptic feedback. Speaking is often the fastest way to initiate an interaction, but complex responses are better conveyed visually or textually.

Take, for instance, searching for a movie: you might say, “Show me classic science-fiction films from the 1990s.” The system can display a curated list with descriptions and ratings on a screen, allowing you to browse efficiently. Similarly, if you’re navigating a new city, a Voice First assistant could provide verbal turn-by-turn directions while simultaneously overlaying visual cues on an AR-enabled map.

In immersive environments like AR and VR, voice commands offer an unparalleled level of hands-free control. Whether you're exploring a virtual showroom, gaming, or collaborating in a digital workspace, voice-driven navigation minimizes physical barriers and maximizes fluidity. This interplay between voice and other modalities allows for seamless transitions between actions, making interactions intuitive and user-centered.

Historical & Personalized Context Awareness

Voice First systems become far more powerful when they can draw on a user’s history, preferences, and even emotions to deliver truly personalized experiences. By remembering past interactions — such as commands, preferences, or location data — and interpreting emotional cues, these systems can provide responses tailored to an individual’s mood, routine, environment, and even their current state of mind.

Imagine asking for dinner suggestions: instead of generic options, the system might prioritize a restaurant you love or suggest a dish you often order. But if it detects frustration in your voice — perhaps from a long day — it could recommend a comforting meal or a quiet venue to help you unwind. Similarly, on a stressful weekday morning, it could prioritize your commute or meetings while offering calming music if it senses stress. On a relaxed weekend evening, it might suggest entertainment, picking up on your excitement to propose something adventurous. These contextual cues — time, weather, activity, or tone — combined with an understanding of your feelings, create seamless, empathetic interactions that feel deeply human and supportive.

Over time, this ability to learn, adapt, and empathize creates an assistant that feels genuinely attuned to your lifestyle and emotional needs, fostering a deeper sense of trust and reliability. By blending objective context with emotional awareness, Voice First systems could truly become partners in your daily life, not just tools.

Proactive Intelligence

A key step forward for Voice First systems is the move from reactive assistants to proactive partners capable of anticipating user needs and offering timely, relevant suggestions. Rather than waiting for explicit commands, these systems analyze behavioral patterns, contextual data, and environmental inputs to deliver meaningful assistance at just the right moment.

For instance, a proactive assistant might notify you to leave for an appointment based on current traffic conditions, or suggest rescheduling if a conflict arises with another event in your calendar. Similarly, it could recommend a weather-appropriate outfit as you prepare for the day or prompt you to restock frequently purchased groceries before you run out.
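To make the "time to leave" example concrete, here is a minimal sketch in Python. It assumes the assistant already has an appointment time and a live travel-time estimate from some traffic source; the function names, the 10-minute arrival buffer, and the 5-minute notification lead are all hypothetical choices for illustration, not part of any particular product.

```python
from datetime import datetime, timedelta


def departure_time(appointment: datetime, travel_minutes: float,
                   buffer_minutes: float = 10) -> datetime:
    """Latest moment to leave in order to arrive `buffer_minutes` early."""
    return appointment - timedelta(minutes=travel_minutes + buffer_minutes)


def should_notify(now: datetime, appointment: datetime, travel_minutes: float,
                  lead_minutes: float = 5) -> bool:
    """True once `now` is within `lead_minutes` of the departure time.

    `travel_minutes` is assumed to come from a live traffic estimate, so
    heavier traffic moves the notification earlier.
    """
    leave_at = departure_time(appointment, travel_minutes)
    return now >= leave_at - timedelta(minutes=lead_minutes)
```

With a 9:00 appointment and a 30-minute drive, the reminder would not fire at 8:10, but if traffic pushes the estimate to 45 minutes, the same check at 8:10 would trigger it. The point of the sketch is that "proactive" behavior can start as a simple rule re-evaluated against fresh context.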

By transitioning from passive responders to proactive contributors, these systems forge a deeper connection with users. They elevate the relationship from one of simple utility to true partnership, offering insights and taking actions that align with your routines and preferences—even before you ask.

Autonomous Task Execution and Results Delivery

The ultimate vision for Voice First is achieving full autonomy, where AI systems handle complex tasks end-to-end without requiring step-by-step guidance. This marks a fundamental shift from assistants that respond to individual queries to agents capable of handling intricate, multi-step processes seamlessly and intelligently.

Consider scheduling a meeting: today, this often involves specifying times, coordinating availability, and confirming arrangements. In a Voice First ecosystem, an autonomous AI agent could independently manage the entire process—checking calendars, resolving conflicts, notifying participants, and finalizing details—all with minimal input from you.
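One small piece of that scheduling workflow, finding a time that works for everyone, can be sketched as follows. This is only an illustration under simplifying assumptions: each participant's calendar is given as a list of busy intervals, and the helper name `first_common_slot` is hypothetical. A real agent would also need calendar access, time zones, and confirmation steps.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

Interval = Tuple[datetime, datetime]  # (start, end) of a busy period


def first_common_slot(calendars: List[List[Interval]],
                      window_start: datetime,
                      window_end: datetime,
                      duration: timedelta) -> Optional[Interval]:
    """Return the earliest slot of `duration` that is free for everyone."""
    # Pool every participant's busy intervals and sort them by start time.
    busy = sorted(iv for cal in calendars for iv in cal)
    cursor = window_start  # earliest moment not yet known to be busy
    for start, end in busy:
        if start - cursor >= duration:
            break  # the gap before this busy interval is long enough
        cursor = max(cursor, end)  # otherwise skip past the busy interval
    if cursor + duration <= window_end:
        return cursor, cursor + duration
    return None  # no common slot inside the window
```

For example, if one person is busy 9:00 to 10:00 and 11:00 to 12:00 and another is busy 9:30 to 10:30, the earliest shared 30-minute slot in a 9:00 to 17:00 window is 10:30 to 11:00.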

This autonomy is amplified when paired with the concept of ambient computing environments, enabled by wearable technology such as smart headphones, AR glasses, or even connected clothing. In such settings, AI assistance is always accessible, activated with a simple spoken prompt, and capable of responding contextually, anytime and anywhere. For example, during your commute, your assistant could autonomously coordinate a meeting by processing real-time traffic data to adjust your schedule, all while keeping you informed through discreet audio updates in your smart earbuds.

Beyond individual tasks, this level of ambient and autonomous operation extends to managing interconnected systems. Imagine requesting, “Plan a weekend getaway,” and the assistant seamlessly handles everything: booking flights, selecting accommodations, arranging car rentals, and even drafting an itinerary based on your preferences. Through wearable devices, it could dynamically provide updates, notify you of changes, or adapt to your feedback in real time.

This integration of autonomous execution with ambient computing transforms Voice AI from a reactive tool into a proactive, ever-present companion. By delivering results autonomously and contextually, it not only enhances productivity but also enriches everyday life, enabling users to focus on creativity, personal growth, and meaningful pursuits.

Why the Time Is Now

Recent innovations in AI, especially Large Language Models (LLMs), are rapidly transforming the vision of Voice First into reality:

Natural Language Understanding: Advanced models such as OpenAI's GPT models, with their Advanced Voice Mode capabilities, have significantly improved speech recognition, language translation, and the interpretation of nuanced language elements such as colloquialisms, accents, and code-switching. Real-time language translation has become more seamless, helping global businesses and travelers overcome linguistic barriers. These systems now handle filler words, pauses, and other speech patterns well enough that conversations feel more natural.

Multimodal Interfaces: Google's Gemini multimodal models and OpenAI's Advanced Voice Mode with visual inputs are two leading examples that showcase the promise of a multimodal interaction flow, in which voice commands are augmented with visual feedback for a richer, more intuitive user experience.

Historical & Personalized Context Awareness: Breakthroughs in Retrieval Augmented Generation (RAG) and hugely expanded LLM context windows now allow systems to store and retrieve vast amounts of personalized data. Integrations such as Apple's Siri with ChatGPT exemplify how voice assistants are evolving into more sophisticated, context-aware helpers.

Proactive Intelligence: With enriched context awareness, voice assistants are evolving to be proactive, predicting user needs and acting before explicit commands are given. Amazon Alexa's "suggested reorder" feature is a primitive example of anticipating needs based on user history. The synergy between Siri and advanced capabilities from ChatGPT is also poised to elevate voice assistants into proactive entities, providing insights and solutions aligned with user habits and preferences.

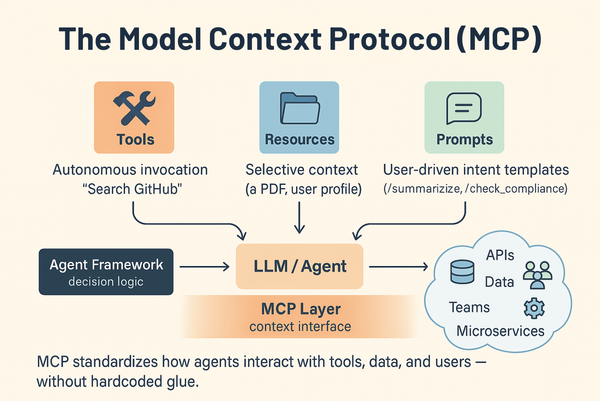

Autonomous Task Execution and Results Delivery: Emerging multi-agent systems, facilitated by frameworks like LangGraph and AutoGen, demonstrate a future where AI agents can autonomously coordinate tasks across multiple systems. This progression moves us closer to frictionless, voice-driven workflows that deliver real-world, end-to-end results.

With these technological strides, the implementation of Voice First solutions is no longer a future prospect but a present reality, offering immediate and tangible benefits to both businesses and individuals.

Navigating the Challenges

As with any technological paradigm shift, the "Voice First" approach comes with significant challenges that span both technical and social dimensions.

From a technical standpoint, several hurdles stand out:

- AI's language understanding capability remains imperfect: Accents, dialects, speech impairments, and noisy environments can significantly disrupt voice recognition. This issue is rooted in the fact that language models, particularly large language models (LLMs), rely on vast but often unbalanced datasets. Achieving true universal accessibility necessitates not only ongoing training but also the inclusion of more diverse, representative data to handle the nuances of global linguistic diversity.

- Context management presents a formidable challenge: Systems must maintain a delicate balance between keeping sufficient conversation history and focusing on relevant context. The complexity arises from the need for sophisticated algorithms that can efficiently prune outdated information while accurately retrieving pertinent details. This is exacerbated by the non-deterministic nature of LLMs, where responses can vary unpredictably, making context retention and relevance even more critical to ensure coherent and helpful interactions.

- Proactive assistance is inherently complex: Even simple tasks like suggesting when to refill household items involve a web of variables including individual consumption patterns, delivery logistics, and real-time product availability. The challenge is compounded by the need to integrate these elements seamlessly into user routines without being intrusive. The non-linear and often unpredictable nature of human behavior further complicates the timing and appropriateness of these interventions.

- The orchestration of multiple AI agents carries unique risks: When multiple large language models interact, there's a potential for "cascading failures." This is due to the non-deterministic behavior of LLMs, where one model's error can trigger a chain reaction, amplifying mistakes across the system. Such scenarios can lead to confused or incorrect responses, degrading the user experience. Designing systems to mitigate these risks requires intricate coordination and error-checking mechanisms.

- Privacy and security are paramount in an always-listening environment: The continuous collection of voice data raises significant privacy concerns. This issue is deeply rooted in the need for robust encryption, transparent data policies, and security measures embedded in listening devices. The challenge is to protect user data against breaches while ensuring that the system remains user-friendly and non-intrusive, balancing security with usability.
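The context-management trade-off described in the list above, keeping enough history while pruning what is stale and retrieving what is relevant, can be illustrated with a deliberately simple sketch. Everything here is an assumption made for illustration: the `ConversationContext` class is hypothetical, the budget is counted in words rather than model tokens, and relevance is plain keyword overlap rather than the embedding-based retrieval a production system would more likely use.

```python
from collections import deque
from typing import List


class ConversationContext:
    """Rolling conversation history with a word budget (hypothetical helper)."""

    def __init__(self, max_words: int = 50) -> None:
        self.max_words = max_words
        self.turns = deque()  # oldest turn on the left
        self.word_count = 0

    def add(self, text: str) -> None:
        """Store a turn, then prune the oldest turns while over budget."""
        self.turns.append(text)
        self.word_count += len(text.split())
        # Always keep at least the most recent turn.
        while self.word_count > self.max_words and len(self.turns) > 1:
            oldest = self.turns.popleft()
            self.word_count -= len(oldest.split())

    def relevant(self, query: str, top_k: int = 2) -> List[str]:
        """Return up to `top_k` stored turns sharing words with the query."""
        q = set(query.lower().split())
        scored = [(len(q & set(t.lower().split())), i, t)
                  for i, t in enumerate(self.turns)]
        hits = [s for s in scored if s[0] > 0]
        # Highest overlap first; ties go to the more recent turn.
        hits.sort(key=lambda s: (-s[0], -s[1]))
        return [t for _, _, t in hits[:top_k]]
```

Even this toy version shows the tension: a small budget silently drops an earlier request, so a later follow-up about it finds nothing, while a large budget keeps everything but makes relevance ranking do more of the work.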

The shift toward Voice First is not just about technology; it also requires significant cultural and behavioral adaptation. For example, voice commands in public or social settings can feel awkward or intrusive, both because of cultural norms around privacy and because the interaction method is still novel. The challenge lies in normalizing voice interactions so that they fit into various social contexts without disrupting the natural flow of human communication.

Users’ trust in AI decision-making is equally pivotal: frequent errors or misinterpretations can push people back toward more familiar touch interfaces. Building that trust requires AI systems that reliably understand and respond to the complexities of human language, including accents, slang, and context. Consequently, public education, thoughtful design, and technological maturity will all play critical roles in easing the transition to predominantly voice-based interactions and in making sure they are reliable, inclusive, and aligned with users’ comfort levels.

From Vision to Action: Practical Steps for Individuals, Businesses, and Developers

Bringing “Voice First” from novelty to necessity requires a concerted effort across multiple domains, with Voice AI Agents at the core of this transformation.

- For business owners, adopting Voice AI Agents is increasingly a strategic imperative rather than a mere curiosity. By incorporating voice-driven systems into customer service, companies can reduce wait times for basic inquiries, automatically route complex issues to human representatives, and provide a 24/7 support presence without ballooning staffing costs. Moreover, voice-optimized marketing and search strategies can capture new audiences who often speak rather than type their queries, ensuring businesses don’t miss out on emerging patterns in consumer behavior. Inventory and supply-chain management can also be streamlined by integrating Voice AI with real-time data, allowing rapid voice commands to reorder stock or schedule deliveries. Beyond these immediate gains, businesses that fail to modernize their infrastructure risk falling behind competitors who leverage voice innovation as a core driver of efficiency, customer satisfaction, and brand differentiation. Adapting content for conversational queries, building internal voice assistants to help employees with routine tasks, and experimenting with voice-enabled sales channels can all translate into long-term competitive advantage. By recognizing and investing in voice technology’s potential now, companies can position themselves at the cutting edge of a rapidly shifting digital landscape.

- For developers, this is a golden age to embark on the journey of building the next generation of Voice AI Agents—tools that will ultimately shape how we all interact with technology. Advances in natural language processing, contextual awareness, and multi-modal integration offer a rare opportunity to push the boundaries of machine intelligence and define the future of human-computer communication. By designing scalable architectures that handle diverse dialects, complex data, and real-world contexts, developers can reimagine what’s possible in everything from smart homes to enterprise-level systems. In doing so, they not only have the chance to solve existing challenges—such as multilingual support or robust privacy protections—but also to pioneer entirely new experiences that will drive how people live, work, and connect for years to come. At the same time, building user trust through transparent data policies and secure protocols ensures that innovation marches hand-in-hand with ethical responsibility.

- For everyday users, the journey often begins with small habits: using voice to set reminders, check weather updates, or manage quick tasks. Yet the real impact becomes clear when people explore advanced Voice AI Agents capable of nuanced, back-and-forth dialogue. Tools like ChatGPT’s voice mode can track conversational context, deliver in-depth explanations, and adapt to follow-up questions. These richer, more intuitive exchanges often turn voice from a sporadic novelty into a go-to interface for organizing daily life. As individuals gain confidence, they can adopt more sophisticated uses, such as controlling smart home systems, planning commutes, or handling personal finances through spoken commands. Here, the agent shifts from utility to genuine partner, learning preferences and anticipating needs rather than waiting for explicit prompts. Over time, voice interaction becomes the default gateway to digital systems, bringing convenience, accessibility, and personalization beyond anything offered by purely text-based interfaces.

By weaving Voice AI Agents into practical steps for individuals, businesses, and development teams, the “Voice First” concept ceases to be a fleeting trend. Instead, it becomes a roadmap for reshaping how we live, work, and communicate—rooted in authentic, human-like dialogue rather than mechanical commands.

Conclusion

We stand at a pivotal moment reminiscent of the “Mobile First” era, only this time the device is our own voice. Technological leaps in speech recognition, contextual understanding, and proactive intelligence have transformed voice assistants from occasional novelties into potential everyday partners in our digital lives.

Yet, the full power of “Voice First” isn’t merely about convenience or freeing our hands. It’s a gateway to deeper, more empathetic AI interactions across all modalities—voice, touch, visual, and beyond. By addressing challenges around privacy, user trust, and ethical design, we can ensure voice interactions evolve to feel as natural and inclusive as genuine human dialogue.

Whether you’re an individual trying to simplify daily tasks, a business aiming to innovate, or a developer ready to redefine the future, now is the time to seize the “Voice First” opportunity. Step forward, speak up, and help shape this next transformative chapter — one spoken word at a time.