Demystifying the Model Context Protocol (MCP) and How It Complements AI Agent Frameworks

The Model Context Protocol (MCP) is an open standard for managing context in AI systems. This article demystifies MCP's core concepts, clears up misconceptions, and highlights how MCP serves as a complementary foundational layer alongside AI agent frameworks.

Introduction

The Model Context Protocol (MCP), proposed by Anthropic, is a forward-looking standard for connecting AI applications, data sources, and tools in a unified way. As large language models (LLMs) evolve from simple question-and-answer engines into fully fledged agents capable of searching the web, interacting with enterprise databases, and automating tasks, a consistent, open protocol for managing “context” becomes increasingly vital.

In a recent talk, Mahesh Murag unpacked the philosophy behind MCP and explained why it can dramatically reduce the fragmentation and ad hoc “glue code” that often plagues AI systems. Rather than reinventing the wheel for each project—deciding how to feed an LLM the right data, hooking into various APIs, or hacking together ephemeral prompts—developers can rely on MCP as a standard layer for context exchange.

One core MCP principle stands out: models are only as good as the context we provide them. Traditional AI apps frequently rely on copy-paste, manual prompt engineering, or patchy integration logic that’s unique to each environment. MCP, by contrast, formalizes how prompts, resources, and external tools are presented to the model. As a result, an LLM-based system can decide for itself which tool to invoke or how to retrieve additional information, without specialized code for every single integration.

Beyond the convenience factor, MCP hints at something deeper: a blueprint for next-generation AI agents that discover new capabilities on their own—pulling from a registry of available tools, abiding by security and authentication protocols, and orchestrating multi-step plans without custom one-off solutions. I believe that open, standardized communication between AI models and the rest of the world will be key to pushing agentic AI forward in a way that is transparent, maintainable, and secure.

What follows is an in-depth look at how MCP works, the problems it solves, and why it can unlock far more flexible, context-rich AI systems. If you’ve struggled with the overhead of “tool integration” or if you’re exploring advanced agentic frameworks, MCP might well be the missing puzzle piece you’ve been looking for.

Core Concepts: Tools, Resources, and Prompts

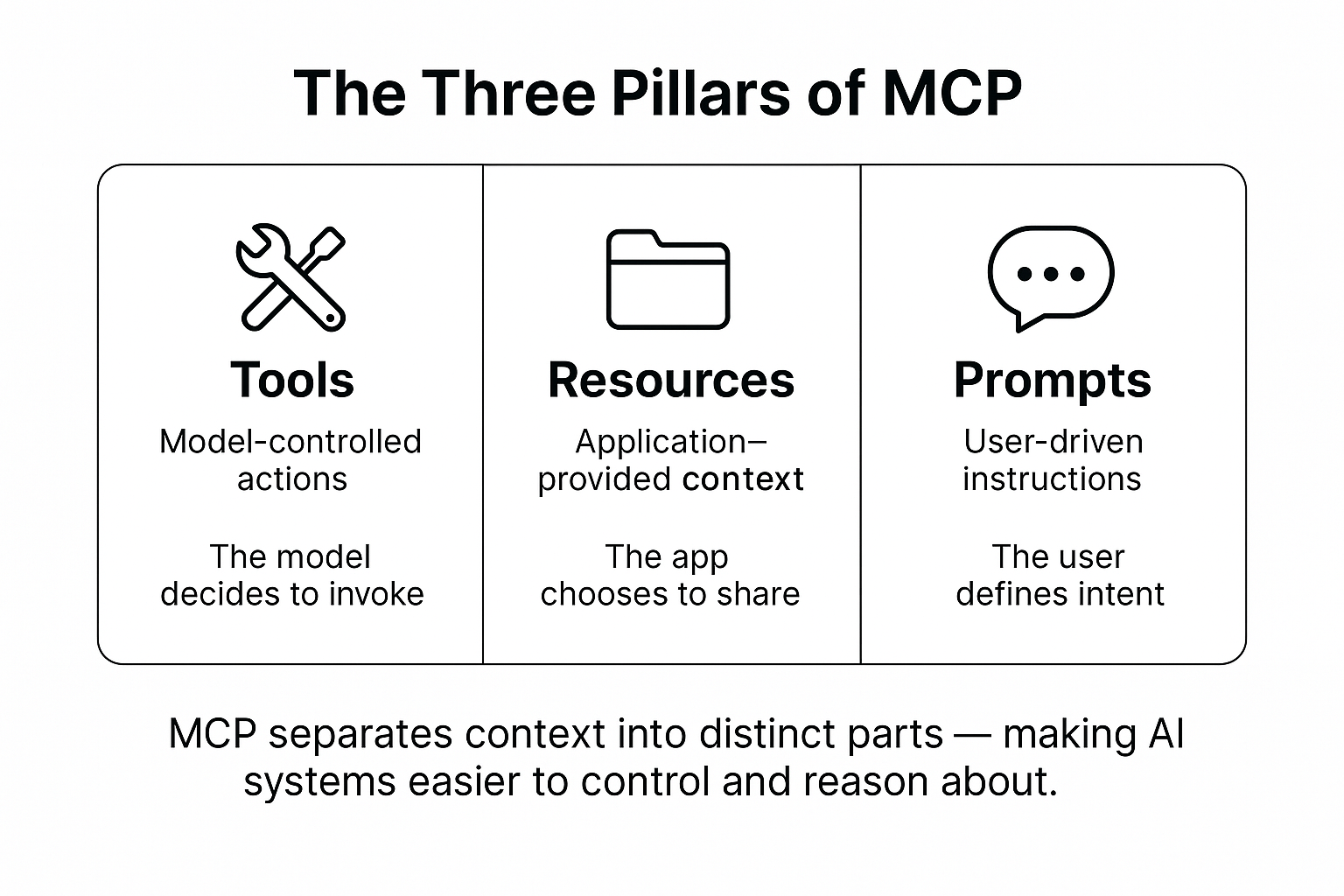

The three pillars of MCP

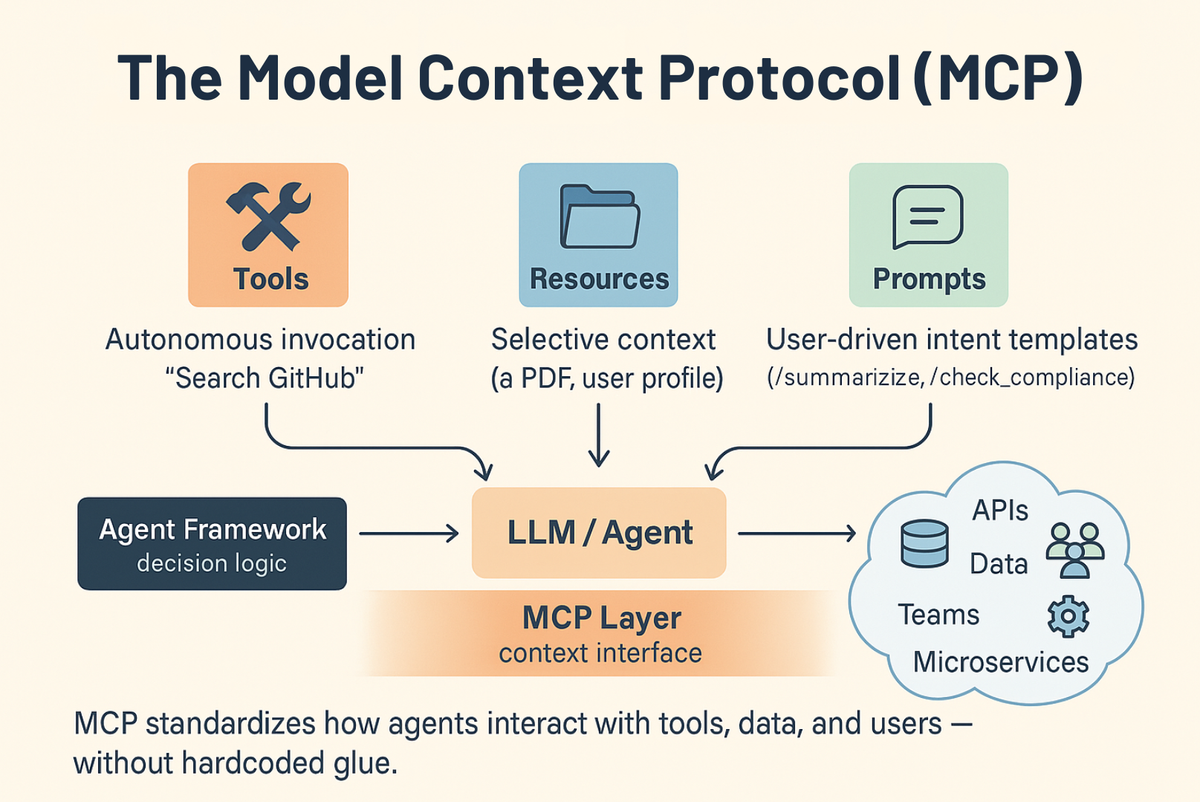

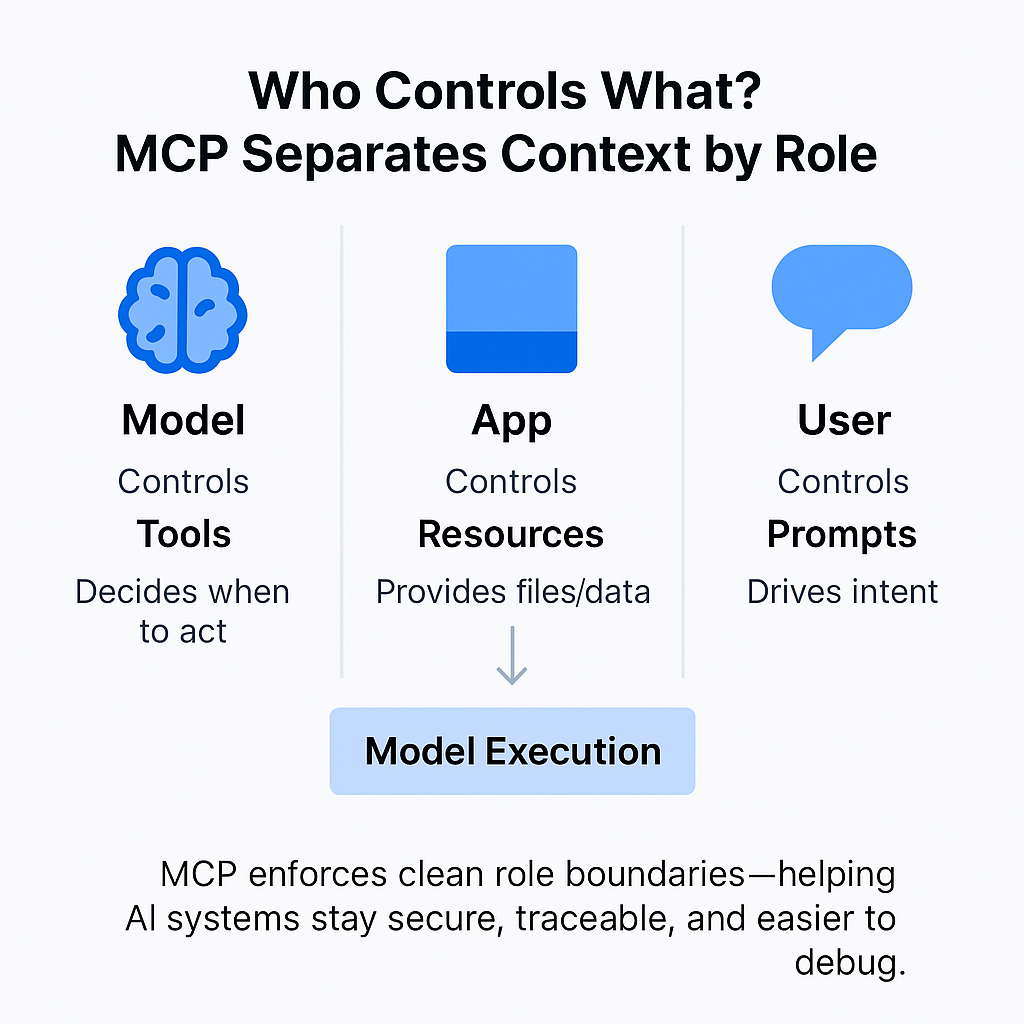

A central hallmark of the Model Context Protocol (MCP) is its structured approach to “context.” Instead of treating all context as a monolithic input to a model, MCP categorizes it under three core concepts—Tools, Resources, and Prompts—each occupying a distinct sphere of control. This design is critical for orchestrating AI systems that remain both transparent and manageable as they grow more complex.

Why Distinguish These Elements?

At a high level, “context” can mean almost anything a model depends on: user queries, internal instructions, domain knowledge, or the interfaces that let it act on external systems. In many AI workflows, however, lumping all these into a single prompt or input stream can introduce ambiguity, security concerns, or debugging challenges. MCP addresses this by segregating the data according to its source, purpose, and the entity responsible for it. Through this structure, each component of context can be governed independently, allowing developers and users to keep better track of how the AI is being guided and what actions it is permitted to take.

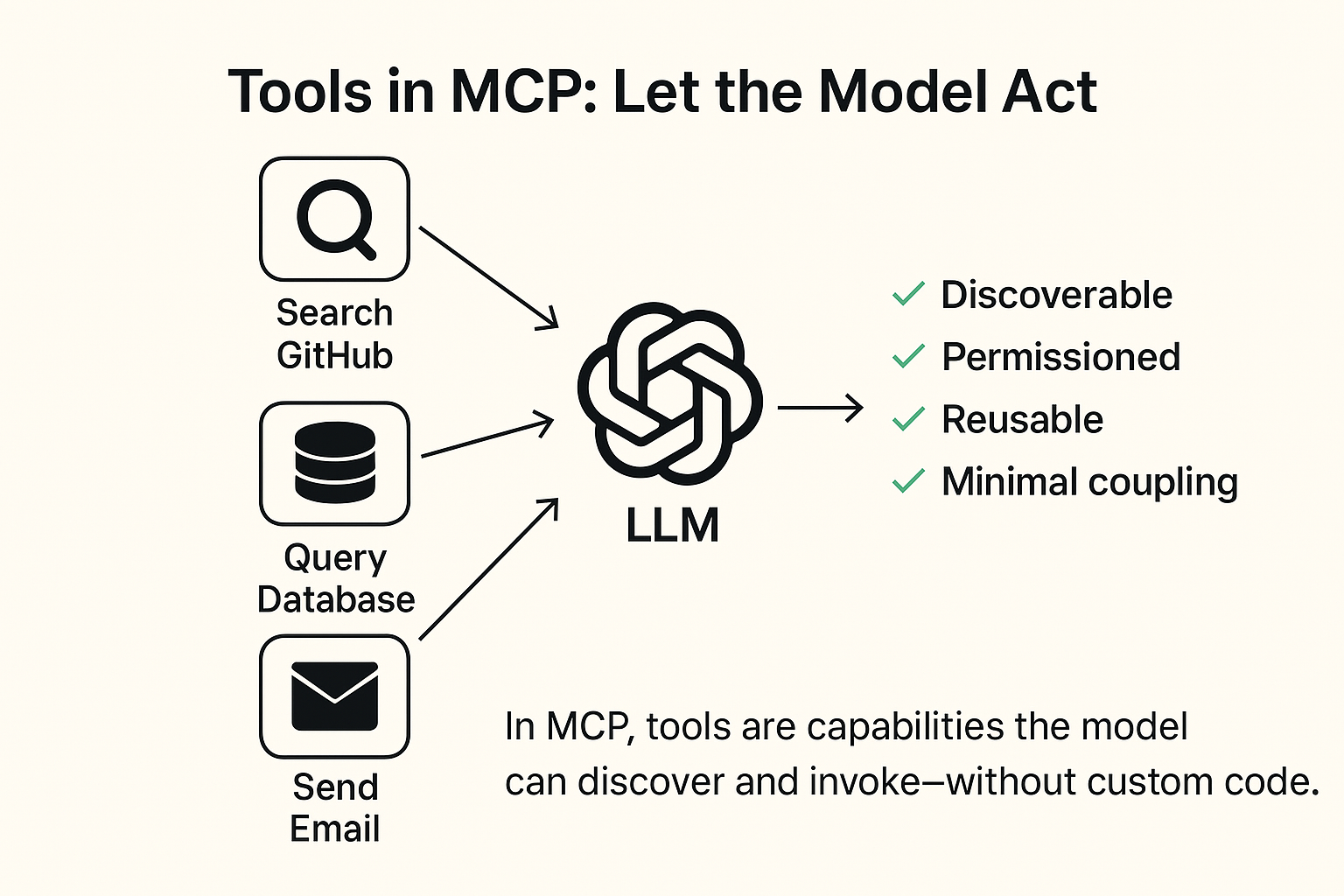

Tools: Model-Controlled Capabilities

Under MCP, a Tool is any external capability the model can choose to invoke on its own initiative. For example, an AI-powered software agent might have access to a “Search GitHub Issues” tool, a “Query Database” tool, or a “Send Email” tool. Each tool is published with a name, a human-readable description, and an input schema (a JSON Schema describing its parameters), so the model knows what the tool does and how to call it.

- Model Autonomy: Tools empower the AI to make decisions about when it requires more data or needs to perform a specific action, without the user having to micromanage each step.

- Enforced Permissions: The server that exposes a tool, and the host application that relays the model’s requests, can apply security constraints or usage limits, ensuring that even if the model “knows” a tool exists, it can only use it under legitimate conditions.

- Minimal Coupling: Developers don’t have to hardcode tool integrations into every agent workflow. Instead, they register tools in the MCP server, and any AI client (or agent) that speaks MCP can discover and use them when relevant.

Sphere of Control: The model decides when to invoke a tool, based on the AI’s internal reasoning or the user’s broader request.
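To make the Tools pillar concrete, here is a minimal, transport-free sketch of the server side in Python. It follows the JSON-RPC 2.0 message shapes MCP uses for its `tools/list` and `tools/call` methods (each tool advertises a `name`, `description`, and JSON Schema `inputSchema`), but the `search_github_issues` tool, its fake in-memory data, and the `handle_request` helper are hypothetical illustrations, not part of any official SDK.

```python
import json

# Hypothetical data standing in for a real GitHub API call.
FAKE_ISSUES = [
    {"number": 101, "title": "Crash on startup", "state": "open"},
    {"number": 102, "title": "Typo in README", "state": "closed"},
]

# Hypothetical tool registry: the definition is what clients discover;
# the handler is what actually runs when the model calls the tool.
TOOLS = {
    "search_github_issues": {
        "definition": {
            "name": "search_github_issues",
            "description": "Search issues by keyword in the title.",
            "inputSchema": {
                "type": "object",
                "properties": {"query": {"type": "string"}},
                "required": ["query"],
            },
        },
        # Schema validation is omitted in this sketch.
        "handler": lambda args: [
            i for i in FAKE_ISSUES if args["query"].lower() in i["title"].lower()
        ],
    },
}


def handle_request(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tools/list or tools/call."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [t["definition"] for t in TOOLS.values()]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": "Unknown tool"}}
        matches = tool["handler"](params.get("arguments", {}))
        # Tool results come back as a list of content items.
        result = {"content": [{"type": "text", "text": json.dumps(matches)}],
                  "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}
```

A client would first send `tools/list` to learn what exists, then issue `tools/call` with `{"name": "search_github_issues", "arguments": {"query": "crash"}}` when the model decides it needs that capability.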

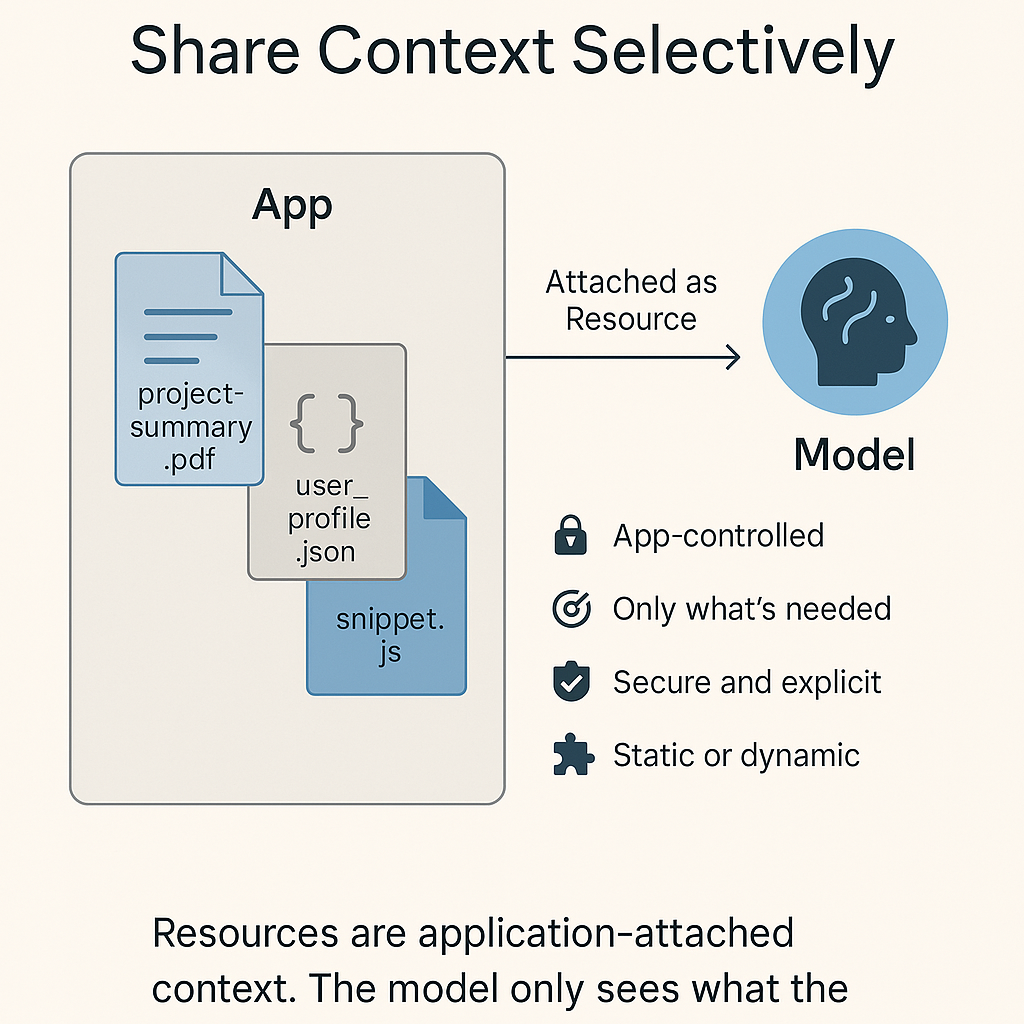

Resources: Application-Controlled Data

While tools are all about the model taking action, Resources serve as contextual data or artifacts that the application attaches. This might be a reference file, a snippet of code, or a structured JSON document. The key is that the application (or user interface) elects to provide these resources, rather than letting the model pull them arbitrarily.

- Selective Context Provisioning: In many scenarios, you may not want to overwhelm your model with irrelevant data or private content. By marking certain documents or records as Resources, the application can attach only what the model truly needs, precisely when it needs it.

- Secure Boundary: If sensitive data is stored in a protected environment, application-level controls can ensure it doesn’t get exposed to the AI unless the user explicitly chooses to share it.

- Flexible Attachment: Resources can be static (e.g., a user-uploaded PDF) or dynamically generated (e.g., a personalized summary). Either way, they maintain their identity within MCP so the model and the user both know exactly what has been shared.

Sphere of Control: The application (or the user via the application’s interface) decides what data is introduced into context as a Resource.
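The split between listing and reading resources is what lets the application stay in charge. The sketch below assumes a hypothetical set of resources and a hypothetical `sensitive` flag that the application (not the protocol) maintains; the result dictionaries mirror the general shape of MCP’s `resources/list` and `resources/read` responses, while `build_context` is an illustrative host-side policy, not an SDK function.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    uri: str
    name: str
    mime_type: str
    text: str
    sensitive: bool = False  # hypothetical flag maintained by the application


# Hypothetical resources a server might expose.
AVAILABLE = {
    r.uri: r
    for r in [
        Resource("file:///specs/api.md", "API spec", "text/markdown", "# API v2 ..."),
        Resource("file:///hr/salaries.csv", "Salaries", "text/csv",
                 "name,salary\n...", sensitive=True),
    ]
}


def list_resources() -> dict:
    """resources/list-style result: metadata only, never the contents."""
    return {"resources": [{"uri": r.uri, "name": r.name, "mimeType": r.mime_type}
                          for r in AVAILABLE.values()]}


def read_resource(uri: str) -> dict:
    """resources/read-style result for a single text resource."""
    r = AVAILABLE[uri]
    return {"contents": [{"uri": r.uri, "mimeType": r.mime_type, "text": r.text}]}


def build_context(selected_uris: list[str], user_approved_sensitive: bool = False) -> list[dict]:
    """Application-side policy: attach only what the user or app selected."""
    attached = []
    for uri in selected_uris:
        r = AVAILABLE.get(uri)
        if r is None:
            continue  # unknown URI: nothing to attach
        if r.sensitive and not user_approved_sensitive:
            continue  # secure boundary: sensitive data stays out unless explicitly shared
        attached.extend(read_resource(uri)["contents"])
    return attached
```

Because the model only ever sees what `build_context` returns, the decision about what enters the context window remains with the application and the user.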

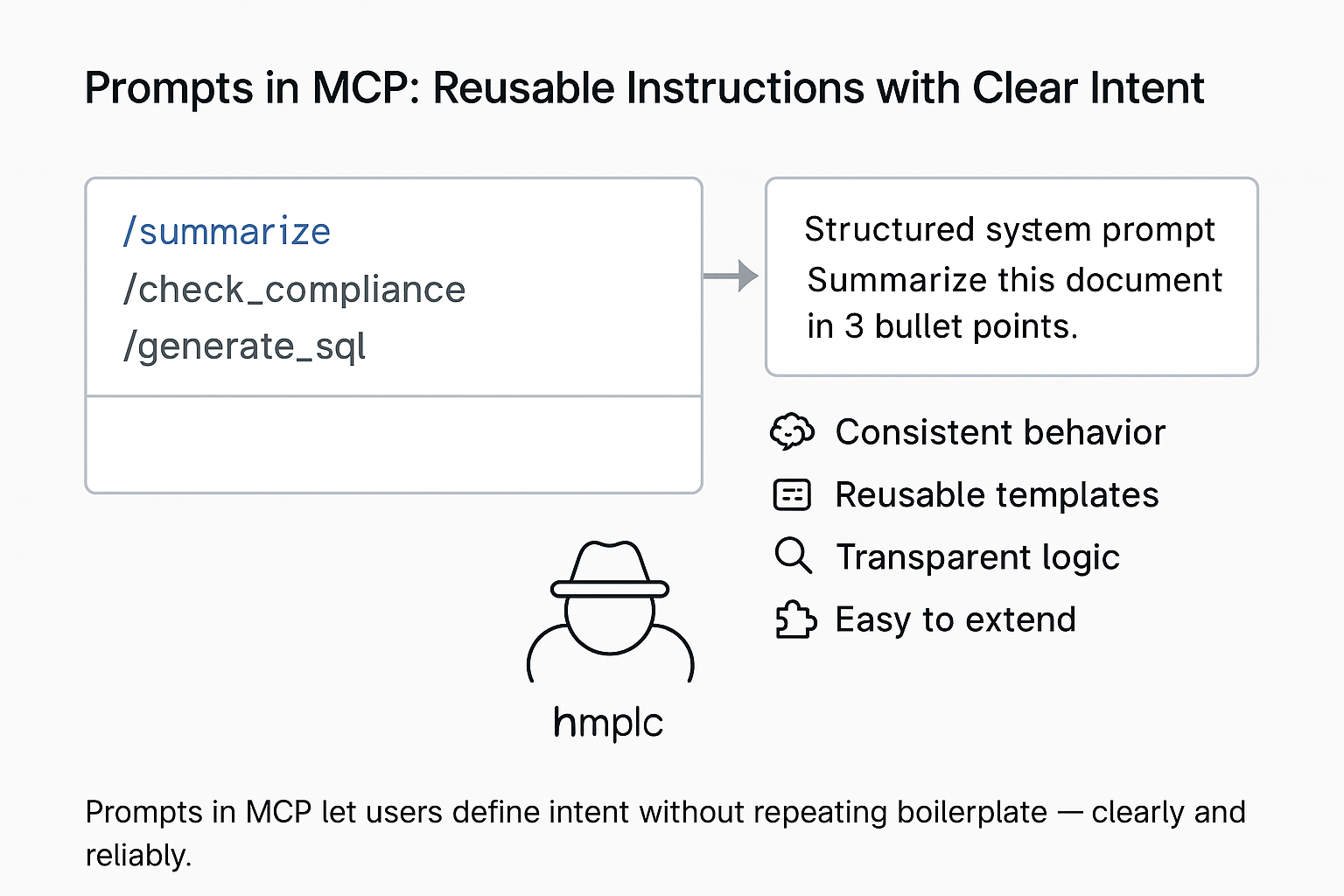

Prompts: User-Controlled Requests or Templates

The third pillar of MCP is the Prompt—a user-driven way of instructing the model or formatting a request without requiring repeated boilerplate. Imagine short “slash commands” that expand into a full-fledged system prompt. By design, prompts are explicitly tied to user intent or user-defined flows.

- Simplified Interaction: For recurring tasks like summarizing documents or generating a query, prompts let the user invoke a pre-built template. This ensures consistency in wording or formatting.

- Control and Transparency: Since prompts are user-facing, they make it clear which instructions the model is receiving and how it’s expected to behave. This is especially helpful when you want a reliable output format or a consistent style.

- Extensibility: An organization might define dozens of specialized prompts for tasks like code debugging, contract review, or analytics reporting. Each one can be published in an MCP server and triggered at will, avoiding repetitive copy-paste instructions.

Sphere of Control: The user (often via a UI or a command) triggers the prompt, shaping exactly how the model interprets or structures its next steps.
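A slash command that expands into a full request can be sketched as simple template filling. The prompt name `summarize`, its arguments, and the `expand_slash_command` UI helper below are hypothetical; the returned dictionary imitates the general shape of an MCP `prompts/get` result (a description plus a list of role-tagged messages), and Python’s `string.Template` stands in for whatever templating a real server would use.

```python
from string import Template

# Hypothetical prompt a server might publish.
PROMPTS = {
    "summarize": {
        "description": "Summarize a document in a consistent format",
        "arguments": [
            {"name": "text", "description": "Text to summarize", "required": True},
            {"name": "style", "description": "Output format", "required": False},
        ],
        "template": Template("Summarize the following text. Format: $style.\n\n$text"),
        "defaults": {"style": "bullet points"},
    },
}


def get_prompt(name: str, arguments: dict) -> dict:
    """Expand a prompt template into a prompts/get-style result."""
    prompt = PROMPTS[name]
    missing = [a["name"] for a in prompt["arguments"]
               if a["required"] and a["name"] not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    values = {**prompt["defaults"], **arguments}  # user-supplied values win
    text = prompt["template"].substitute(values)
    return {"description": prompt["description"],
            "messages": [{"role": "user", "content": {"type": "text", "text": text}}]}


def expand_slash_command(line: str) -> dict:
    """Hypothetical UI glue: '/summarize <text>' becomes a full prompt."""
    command, _, rest = line.partition(" ")
    return get_prompt(command.lstrip("/"), {"text": rest})
```

The user types `/summarize MCP standardizes context.` and the model receives the same carefully worded instruction every time, which is the consistency benefit described above.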

Cleaner Data Flows and Secure Role Separation

By aligning each piece of context with the party that owns or initiates it (the model, the application, or the user), MCP establishes a clear division of responsibilities. Tools are invoked only when the model explicitly decides to call them; resources do not slip into a conversation unless the application attaches them; prompts remain clear and user-driven. This compartmentalization leads to:

- Reduced Confusion: Developers and users can quickly see which part of the workflow is responsible for an action or data point.

- Enhanced Security: Sensitive data can stay locked down as a resource until explicitly shared. Likewise, a model can only invoke tools it’s authorized to use.

- Scalable Collaboration: In larger teams or multi-agent environments, each role—be it the UI developer, the domain expert, or the model developer—knows exactly what part of the MCP architecture they must manage or configure.
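One way a host application might encode this division of responsibilities is a small gate that every incoming context item must pass. This is a hypothetical policy sketch, not something the protocol mandates: the `Initiator` enum, the `CONTROL` mapping, and the `authorized_tools` set are assumptions that simply restate the three spheres of control described above.

```python
from enum import Enum


class Initiator(Enum):
    MODEL = "model"
    APPLICATION = "application"
    USER = "user"


# Who may initiate each kind of context, following the three pillars.
CONTROL = {
    "tool": Initiator.MODEL,
    "resource": Initiator.APPLICATION,
    "prompt": Initiator.USER,
}


def check(kind: str, initiator: Initiator, name: str, authorized_tools: set[str]) -> bool:
    """Return True if this context item may enter the conversation."""
    if CONTROL.get(kind) is not initiator:
        return False  # e.g. the model trying to attach a resource on its own
    if kind == "tool" and name not in authorized_tools:
        return False  # the model may only invoke tools it is authorized to use
    return True
```

Because each rejection maps to a specific rule, logging the `(kind, initiator, name)` triple also gives developers the audit trail implied by the “Reduced Confusion” point.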

Ultimately, Tools, Resources, and Prompts provide MCP with a robust framework for delivering context to AI systems in a way that is both powerful and granular. Whether you’re building a simple Q&A chatbot or orchestrating a network of specialized agents, this tiered approach helps ensure that data flows remain cohesive, purposeful, and secure.

MCP as a Foundational Layer for Agents and Where Agent Frameworks Fit In

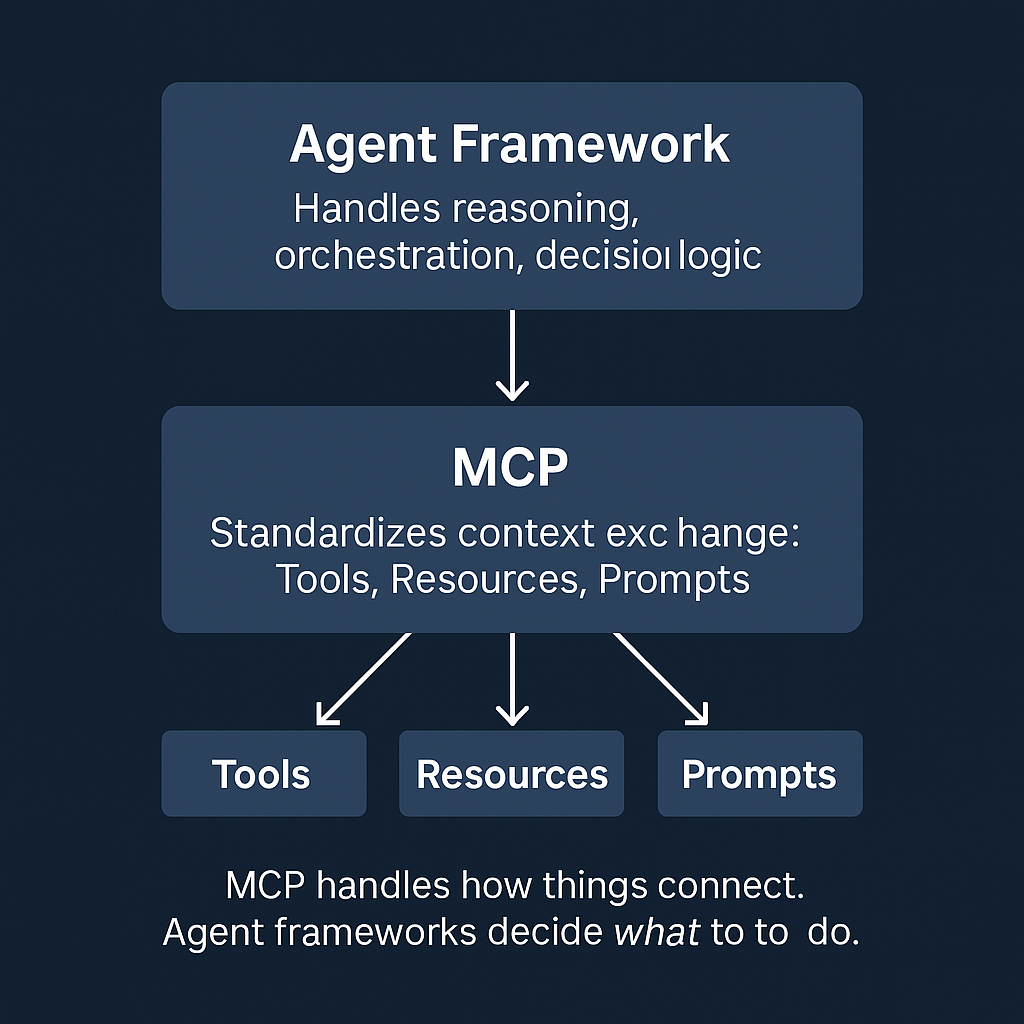

A key lesson for anyone exploring the Model Context Protocol (MCP) is how it relates to, rather than replaces, agent frameworks. At first glance, the very idea of an “open standard” for exchanging prompts, tools, and resources might seem to overlap with existing agentic solutions. After all, agent frameworks often handle sophisticated decision-making, multi-agent dialogues, and contextual prompts. But while the two can appear similar in scope, MCP and agent frameworks serve complementary roles.

Agent frameworks, whether custom-built or off-the-shelf, are mainly about logic and orchestration. They define how an AI system “thinks,” including when (and why) it calls a specific tool, how it manages chain-of-thought, and how multiple agents coordinate to complete a larger task. In other words, an agent framework governs the flow of reasoning: deciding whether one agent should request data from another, choosing which part of the conversation to summarize, and controlling how the system evolves over time.

MCP, by contrast, is more like a universal “network layer” for AI. Instead of prescribing internal agent logic, MCP enforces a standardized approach to describing and exchanging context—be it a prompt template for user instructions, a chunk of data from a remote database, or the endpoint of a newly discovered API. Because each component in an MCP-based system speaks the same language for describing these interactions, multiple agents (each with its own internal logic) can interoperate without needing specialized glue code. The result is a more modular setup: you can integrate or swap out agents, data sources, or specialized tools without rebuilding the entire pipeline from scratch.

To put it another way: MCP focuses on how context travels, while an agent framework decides which context to provide, when to acquire more data, and how to interpret the results. In a multi-agent world, MCP’s standardized layer also makes it far simpler to connect agents that weren’t necessarily designed to work together, because each agent or tool only needs to comply with MCP’s established interfaces. Once a new server or specialized capability (like web search or CRM integration) is connected, the orchestrating agent framework can list its tools and start using them, with no custom endpoints or bespoke integration code.
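The division of labor can be sketched in a few dozen lines. Here `ContextServer` is a hypothetical stand-in for an MCP client session (real SDKs expose richer, usually asynchronous, interfaces), `InMemoryServer` is a toy implementation, and `Orchestrator` plays the agent-framework role. The point of the sketch is that the orchestrator contains only “which tool, in what order” logic; everything about “how to talk to a server” lives behind one shared interface.

```python
from typing import Callable, Protocol


class ContextServer(Protocol):
    """Hypothetical stand-in for an MCP client session to one server."""

    def list_tools(self) -> list[dict]: ...

    def call_tool(self, name: str, arguments: dict) -> str: ...


class InMemoryServer:
    """Toy server: maps tool name -> (description, function)."""

    def __init__(self, tools: dict[str, tuple[str, Callable[..., str]]]):
        self._tools = tools

    def list_tools(self) -> list[dict]:
        return [{"name": n, "description": d} for n, (d, _) in self._tools.items()]

    def call_tool(self, name: str, arguments: dict) -> str:
        return self._tools[name][1](**arguments)


class Orchestrator:
    """Agent-framework side: decides what to call, relies on one interface for how."""

    def __init__(self) -> None:
        self._routes: dict[str, ContextServer] = {}

    def connect(self, server: ContextServer) -> None:
        # Discovery: every tool on a newly connected server becomes routable.
        for tool in server.list_tools():
            self._routes[tool["name"]] = server

    def available_tools(self) -> list[str]:
        return sorted(self._routes)

    def run(self, plan: list[tuple[str, dict]]) -> list[str]:
        # The "reasoning" is a fixed plan here; a real framework would let an LLM choose.
        return [self._routes[name].call_tool(name, args) for name, args in plan]
```

For example, connecting a hypothetical `web_search` server and then a `crm_lookup` server requires no change to `Orchestrator`; both tools simply appear in `available_tools()`.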

This decoupling of roles has practical benefits. By offloading the details of data exchange to MCP, agent frameworks can zero in on orchestrating the cognitive flow: delegating tasks across agents, managing conversation state, or deciding whether to verify a fact with a “checker agent.” Developers retain the freedom to implement multi-step logic or multi-agent collaboration however they see fit, confident that MCP handles the nitty-gritty of packaging and routing context.

Over time, this layered approach is likely to supercharge advanced AI systems. Agent frameworks can become more powerful because they’re free to focus on higher-level reasoning—knowing that, through MCP, they can tap into a growing ecosystem of tools and data resources using the same protocol. Meanwhile, organizations can share or re-use integrations—like a Slack or GitHub server—across many projects, without forcing each agent developer to write their own specialized code.

Rather than competing with each other, agent frameworks and MCP form a symbiotic relationship. Where agent frameworks bring the “brain” and “orchestration” of an AI system, MCP supplies the “circulatory system,” ensuring every prompt, resource, or tool call arrives in a consistent format. By recognizing this interplay, developers can design multi-agent systems that are both highly adaptable and less prone to fragmentation, ushering in a new wave of scalable, context-rich AI.

Common Misconceptions About MCP

Even though the Model Context Protocol (MCP) is designed to simplify AI development, it often raises questions among those encountering it for the first time. Below are some frequently heard misconceptions—and a look at how MCP actually operates.

Misconception #1: “MCP Replaces Agent Frameworks Entirely.”

As we discussed in the earlier section, it’s understandable how one might see MCP—designed to handle prompts, resources, and tool calls in a standardized way—and conclude that it encompasses all the logic typically found in agent frameworks. Agent frameworks, however, do much more than merely exchange data: they handle the deeper “reasoning loop,” orchestrating which tools or sub-agents to invoke and when. MCP’s role is to serve as a communication backbone, ensuring that prompts, data attachments, and tool commands are packaged consistently, so multiple agents and services can interact without ad hoc code. In other words, if an agent framework decides to dispatch a coding task to a specialized “coder agent,” MCP simply guarantees both parties speak the same language for passing relevant context. By leaning on MCP for context handling, agent frameworks become free to focus on the higher-level logic of multi-step processes and multi-agent coordination.

Misconception #2: “All Context in MCP Is Just One Big ‘Function Call.’”

At a glance, it can seem that everything an AI system needs—prompts, external tool calls, and resource attachments—funnels through a single invocation flow. That concern usually comes from confusion about how MCP actually segments different aspects of context. In practice, MCP carefully distinguishes between tools (which the model can decide to use), resources (which the application selectively attaches), and prompts (often user-driven commands or templates). Each of these elements follows its own interface and control mechanism, preventing accidental overlap or chaos in the system. Rather than juggling an unwieldy “blob” of data, MCP-based architectures keep each type of context in its rightful place, which in turn makes debugging, security checks, and user guidance simpler and more robust.
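The separation is visible even at the wire level: each kind of context is requested through its own JSON-RPC method. The sketch below builds requests for MCP’s `tools/call`, `resources/read`, and `prompts/get` methods; the tool name, URI, and prompt name used in the usage note are hypothetical examples.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def make_request(kind: str, **params) -> str:
    """Build a JSON-RPC 2.0 request; each context type has its own MCP method."""
    method = {
        "tool": "tools/call",          # model-initiated action
        "resource": "resources/read",  # application-attached data
        "prompt": "prompts/get",       # user-triggered template
    }[kind]
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids),
                       "method": method, "params": params})
```

Calling `make_request("tool", name="query_database", arguments={"sql": "SELECT 1"})`, `make_request("resource", uri="file:///specs/api.md")`, and `make_request("prompt", name="summarize", arguments={"text": "..."})` produces three structurally distinct messages, so a server (or an audit log) never has to guess which kind of context it is handling.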

Misconception #3: “Security and Authentication Were Bolted On Later.”

Given how swiftly AI protocols evolve, it's natural to be wary of whether security considerations have kept pace. In MCP's case, security is a foundational, continuously evolving component of the protocol. Recent revisions of the specification define an OAuth 2.1-based authorization framework for HTTP-based transports and support granular, per-server permission controls, so that a client cannot simply invoke arbitrary tools without proper authorization. Servers can explicitly define which user scopes and data flows they permit, providing a gatekeeping layer at the protocol level. The MCP community is also actively planning official “registries” to verify the authenticity and version of any given MCP server. For organizations where data protection and system integrity are critical requirements, these ongoing security measures deserve close attention.
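The gatekeeping idea can be illustrated with a server-side check that runs before any tool executes. Everything here is a simplifying assumption: the scope names, the `REQUIRED_SCOPE` table, and the `TOKENS` dictionary (which replaces real token validation against an authorization server) are hypothetical. The status codes follow ordinary HTTP conventions: 401 when no valid credential is presented, 403 when the credential lacks the needed scope.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical mapping of tool name -> OAuth scope the caller must hold.
REQUIRED_SCOPE = {"read_tickets": "tickets:read", "close_ticket": "tickets:write"}

# Stand-in for real token validation; a production server would verify
# tokens issued by an OAuth authorization server instead.
TOKENS = {"token-abc": {"tickets:read"}}


@dataclass
class Decision:
    status: int  # HTTP status the server would return
    reason: str


def authorize_tool_call(bearer_token: Optional[str], tool: str) -> Decision:
    """Deny by default; allow only tools whose scope the token carries."""
    scopes = TOKENS.get(bearer_token or "")
    if scopes is None:
        return Decision(401, "missing or invalid token")
    required = REQUIRED_SCOPE.get(tool)
    if required is None:
        return Decision(403, "tool not exposed to this client")
    if required not in scopes:
        return Decision(403, f"requires scope {required}")
    return Decision(200, "ok")
```

A read-only token can therefore list support tickets but is refused when the model tries to close one, regardless of how confidently the model “decides” to call that tool.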

Misconception #4: “Versioning and Maintenance Could Be a Mess.”

With any open ecosystem, concerns about version fragmentation are inevitable. As multiple teams publish their own MCP “servers,” a new feature or minor update can raise fears of breaking existing workflows. To address this, the protocol itself uses date-stamped revisions (for example, 2025-03-26) that client and server negotiate when a connection is initialized, and MCP encourages explicit versioning and backward-compatible releases, allowing tools to evolve without forcing everyone to upgrade simultaneously. Planned registry support will let developers pin dependencies to a stable release or experiment with nightly builds, much like they would with Python packages or npm modules. Additionally, each server decides precisely which tools it exposes and can manage deprecation policies accordingly, making it easier to maintain reliability across diverse projects.
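Version negotiation during initialization can be sketched as two small decisions. The client proposes the newest revision it supports; the server echoes it if supported, otherwise offers its own newest; the client then continues only if it understands the offer. The lists of supported revisions below are illustrative, and the `initialize_request` helper is a hypothetical convenience that mirrors the shape of an MCP `initialize` request.

```python
# Illustrative lists of date-stamped protocol revisions each side supports.
SERVER_SUPPORTED = ["2025-06-18", "2025-03-26", "2024-11-05"]
CLIENT_SUPPORTED = ["2025-03-26", "2024-11-05"]


def initialize_request(client_name: str) -> dict:
    """Client proposes the newest revision it supports."""
    return {"jsonrpc": "2.0", "id": 1, "method": "initialize",
            "params": {"protocolVersion": max(CLIENT_SUPPORTED),  # YYYY-MM-DD sorts correctly
                       "capabilities": {},
                       "clientInfo": {"name": client_name, "version": "0.1.0"}}}


def server_negotiate(requested: str, supported: list[str]) -> str:
    """Echo the client's revision if supported, else offer our newest one."""
    return requested if requested in supported else max(supported)


def client_accepts(offered: str, supported: list[str]) -> bool:
    """Client continues only if it understands the server's chosen revision."""
    return offered in supported
```

Here an older client talking to a newer server settles on `2025-03-26` without either side upgrading, which is the kind of gradual evolution the paragraph above describes.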

Misconception #5: “You Must Host Everything—and Maintain Long-Lived Connections—to Use MCP.”

Early MCP demonstrations often showcased local servers launched as subprocesses (communicating over standard input and output) or remote servers relying on persistent Server-Sent Events (SSE) connections. This gave the impression that adopting MCP inherently meant running multiple processes, each holding open a connection. Recent updates, however, reflect a pivot toward a more flexible HTTP-based transport (called “Streamable HTTP” in the specification) that accommodates both stateless and stateful needs. An MCP server can now answer a single-shot request with a plain JSON response and switch to an SSE stream only when streaming is necessary. This approach drastically simplifies hosting: a serverless or container-based environment can spin up and respond to a request without holding onto a perpetual socket. For scenarios demanding extended context, like multi-step tasks, servers can still issue session IDs, but there’s no blanket requirement for always-on connections. In essence, this evolution lets MCP fit smoothly into modern cloud infrastructure, sidestepping the friction of long-lived SSE connections while remaining fully capable of handling real-time updates when needed.
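The per-request choice between a plain response and a stream can be captured in one function. This is a simplified, framework-free sketch: `respond` and `format_sse` are hypothetical helpers, the `outputs` list stands in for whatever the server’s handler produced, and details such as resumability and origin validation are omitted. It follows the broad rules of the Streamable HTTP transport: notifications get `202 Accepted` with no body, a single result can go back as `application/json`, multiple messages go back as a `text/event-stream`, and a stateful server may assign an `Mcp-Session-Id` header during initialization.

```python
import json
import uuid


def format_sse(messages: list[dict]) -> str:
    """Encode JSON-RPC messages as Server-Sent Events, one 'data:' event each."""
    return "".join(f"data: {json.dumps(m)}\n\n" for m in messages)


def respond(body: dict, outputs: list[dict], stateful: bool) -> tuple[int, dict, str]:
    """Pick an HTTP response (status, headers, body) for one POSTed JSON-RPC message.

    outputs: messages the (hypothetical) handler produced, e.g. progress
    notifications followed by the final result.
    """
    headers: dict[str, str] = {}
    if "id" not in body:  # a notification expects no reply
        return 202, headers, ""
    if stateful and body.get("method") == "initialize":
        # Clients echo this header on later requests to continue the session.
        headers["Mcp-Session-Id"] = uuid.uuid4().hex
    if len(outputs) == 1:  # single-shot: plain JSON, no open stream
        headers["Content-Type"] = "application/json"
        return 200, headers, json.dumps(outputs[0])
    headers["Content-Type"] = "text/event-stream"  # stream intermediate messages, then the result
    return 200, headers, format_sse(outputs)
```

A stateless deployment can call `respond(..., stateful=False)` on every request and never hold a socket open beyond the lifetime of a single HTTP exchange.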

Putting It All Together

MCP isn’t merely another AI library; it’s a common language for managing context across models, agents, and external tools. By recognizing that MCP serves as a protocol layer—not a replacement for agentic reasoning—and that its approach to security, versioning, and transport is already evolving with the broader AI ecosystem, one gains a clearer view of its potential.

Real-World Scenarios

An Enterprise Use Case

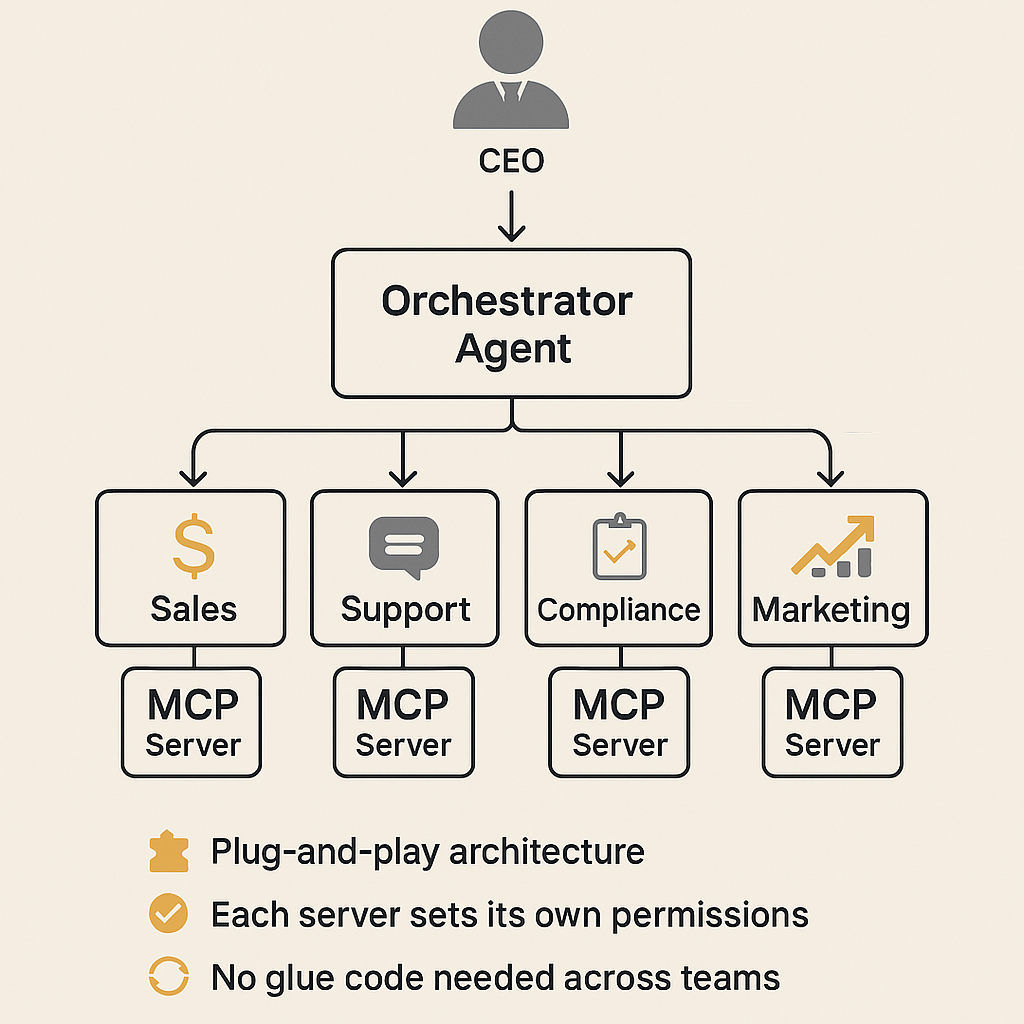

Imagine a mid-sized enterprise where multiple departments—Sales, Marketing, Support, and Compliance—each manage their own microservices, from CRMs and analytics dashboards to ticket systems and policy checkers. Under traditional approaches, uniting all these services into a shared AI workflow would involve writing endless “glue code,” creating maintenance headaches any time a tool or API changed. MCP offers a more unified path: each department simply publishes an MCP server, exposing the relevant tools and resources according to a single, open standard.

With that in place, a custom multi-agent orchestrator—designed in-house or adapted from an existing agent framework—can communicate with every MCP server seamlessly. If the CEO requests a combined view of a customer’s recent support tickets, CRM status, marketing engagement, and compliance logs, the orchestrator delegates tasks to specialized sub-agents. One sub-agent calls the Support server to fetch open ticket details. Another queries the CRM server for the customer’s sales pipeline history. A third consults the Marketing server to gather campaign engagement metrics, and a fourth checks the Compliance server to ensure data policies aren’t being breached.
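Because the four lookups are independent, an orchestrator can run them concurrently and merge the results. In the sketch below each department server is replaced by a hypothetical async function with canned data and a simulated network delay; in practice each would be a tool call on a separate MCP session. `asyncio.gather(..., return_exceptions=True)` keeps one failing department from sinking the whole report.

```python
import asyncio


# Hypothetical department tools; real ones would be calls to separate MCP servers.
async def support_open_tickets(customer_id: str) -> dict:
    await asyncio.sleep(0.01)  # simulate network latency
    return {"open_tickets": 2}


async def crm_pipeline(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"stage": "renewal"}


async def marketing_engagement(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"campaigns_opened": 5}


async def compliance_check(customer_id: str) -> dict:
    await asyncio.sleep(0.01)
    return {"policy_ok": True}


SUB_AGENTS = {
    "support": support_open_tickets,
    "crm": crm_pipeline,
    "marketing": marketing_engagement,
    "compliance": compliance_check,
}


async def customer_overview(customer_id: str) -> dict:
    """Fan out to every sub-agent concurrently and merge the results."""
    names = list(SUB_AGENTS)
    results = await asyncio.gather(
        *(SUB_AGENTS[n](customer_id) for n in names), return_exceptions=True
    )
    # Record failures per department instead of aborting the whole report.
    return {
        name: {"error": str(r)} if isinstance(r, Exception) else r
        for name, r in zip(names, results)
    }
```

Adding a fifth department would mean adding one entry to `SUB_AGENTS`; the fan-out and merge logic stays the same.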

The real advantage emerges as these departments grow or new ones appear. Each team can spin up its own MCP server, giving the orchestrator (and any sub-agents) a standardized interface for discovering and using the new tools. There’s no need to refactor the entire codebase just to accommodate an additional microservice. Security and versioning stay consistent too: each department sets its own permissions and updates, and the orchestrator simply relies on MCP’s existing security hooks and version-management guidelines. As a result, the enterprise gains a scalable, plug-and-play ecosystem, where collaborative intelligence can flourish without tangling itself in countless one-off integrations.

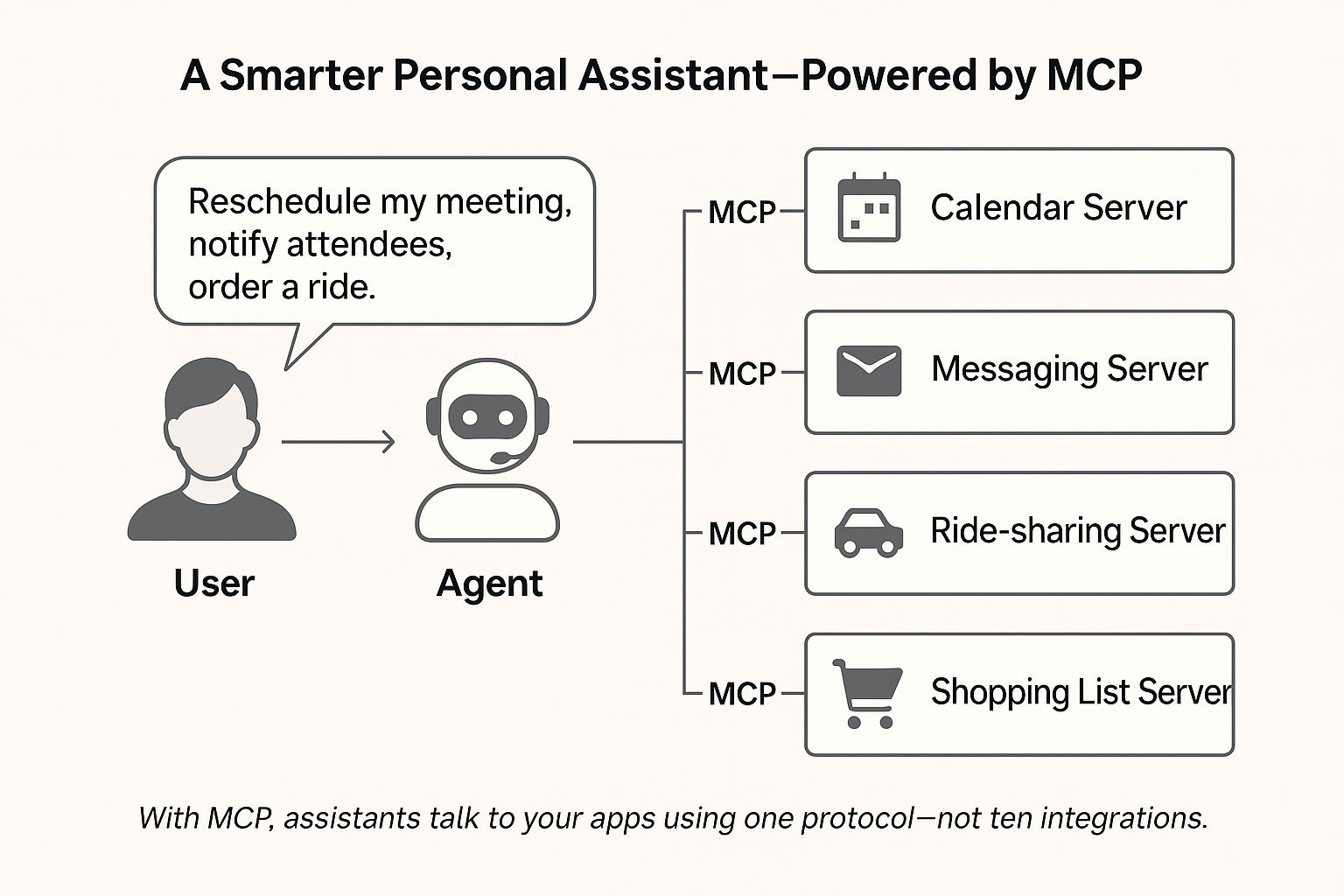

A Consumer-Centric Example: Personal AI Assistant

Consider an everyday consumer who wants to streamline daily tasks using a personal AI assistant. This user juggles multiple services: a calendar app, a shopping list tool, ride-sharing services, and a music-streaming library. Ordinarily, stitching these together requires app-specific integrations, often leaving the user stuck swapping between disconnected tools or text prompts (“Check my calendar, then book me a car at the right time, and add bread and milk to my shopping list”). With the Model Context Protocol, each service can act as an MCP server, providing well-defined “tools” (such as “get upcoming events,” “request a ride,” or “add grocery items”).

The user’s AI assistant, whether it’s a custom project or part of an off-the-shelf platform, then speaks MCP to connect with these diverse apps. For example, if the user says, “I’m going to be late to my afternoon meeting: reschedule it, notify the attendees, and order me a ride,” the assistant can consult the calendar server to find the relevant meeting, use a messaging server to send an update, and finally call the ride-sharing server to arrange pickup, all through the same standardized protocol. No per-service integration code is required: once the assistant connects to a server, it can list that server’s tools, read each tool’s description and input schema, and invoke it.
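Unlike the enterprise fan-out, this request is a chain: each step needs the previous step’s output. The sketch hard-codes the sequence the model would normally choose, and every tool in it (`find_afternoon_meeting`, `reschedule`, `notify`, `request_ride`), the sample calendar entry, and the fixed 25-minute travel time are hypothetical placeholders for tools exposed by separate calendar, messaging, and ride-sharing servers.

```python
from datetime import datetime, timedelta

# Hypothetical calendar data that a calendar server would hold.
CALENDAR = [{"id": "evt-1", "title": "Design review",
             "start": datetime(2025, 5, 6, 14, 0),
             "attendees": ["ana@example.com", "raj@example.com"],
             "location": "HQ"}]

SENT: list[tuple[list[str], str]] = []  # messages "sent" by the messaging tool


def find_afternoon_meeting(day: datetime) -> dict:
    # Raises StopIteration if there is no afternoon meeting that day.
    return next(e for e in CALENDAR
                if e["start"].date() == day.date() and e["start"].hour >= 12)


def reschedule(event: dict, delay: timedelta) -> dict:
    event["start"] += delay
    return event


def notify(recipients: list[str], text: str) -> int:
    SENT.append((recipients, text))
    return len(recipients)


def request_ride(destination: str, arrive_by: datetime) -> dict:
    # Hypothetical fixed travel time of 25 minutes.
    return {"destination": destination, "pickup": arrive_by - timedelta(minutes=25)}


def running_late(day: datetime, delay: timedelta) -> dict:
    """Chain three servers' tools: each step consumes the previous step's output."""
    meeting = reschedule(find_afternoon_meeting(day), delay)
    notified = notify(meeting["attendees"],
                      f"'{meeting['title']}' moved to {meeting['start']:%H:%M}")
    ride = request_ride(meeting["location"], meeting["start"])
    return {"meeting": meeting["title"], "new_start": meeting["start"],
            "notified": notified, "ride": ride}
```

With a 30-minute delay, the meeting moves from 14:00 to 14:30, both attendees are notified, and a ride is requested for a 14:05 pickup.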

This setup becomes even more powerful as the consumer’s needs grow. Perhaps they install a fitness server that tracks workout progress or a smart-home server that controls lights and thermostats. As soon as these services are published under MCP, the AI assistant can discover and integrate their tools, enabling interactions like, “Turn off the living room lights when I leave for my gym class, and update my workout logs accordingly.” In this way, MCP helps ordinary users avoid juggling specialized APIs or switching between dozens of apps—giving them a truly unified personal assistant that still respects each service’s security and data boundaries.

Reflections and Conclusion

Throughout our exploration of the Model Context Protocol (MCP), one central theme emerges: standardizing how context flows dramatically reduces the complexity of AI development. Rather than repeatedly hacking together one-off integrations for each new tool or data source, MCP sets a unified pattern for describing prompts, data attachments, and tool calls.

This design also encourages better governance and clearer boundaries. Each “player” in the system—be it a model, a user, or an application—knows exactly what it can access and how it can use that access. The end result is more transparent development, easier auditing, and smoother collaboration.

While MCP is still in its early stages, its adaptability is part of the appeal. The protocol is actively evolving through community input, and there are still open questions—around tooling, security, and deployment patterns—that will shape how it matures. What’s promising is not that MCP is fully resolved, but that it is heading in the right direction.

Future enhancements—like an MCP registry that functions as an “app store” for public servers—could make discovery and versioning even more seamless. Meanwhile, as multi-agent systems grow more sophisticated, MCP is well-positioned to ensure context remains both consistent and secure, provided it continues evolving through community-driven insights and feedback.

Ultimately, MCP doesn’t replace agentic logic or security frameworks—it complements them. By providing a common language for context exchange, MCP empowers developers to build AI systems that are easier to maintain, more transparent, and ready to scale. As AI permeates our daily routines, this blend of clarity and adaptability may prove indispensable for creating agentic systems that can keep pace with continuous change.