Can Large Language Models (LLMs) Reason and Plan? Exploring the LLM-Modulo Framework

While LLMs excel at generating quick, intuitive responses, they generally struggle with complex, logical planning tasks. The LLM-Modulo Framework addresses this by integrating LLM-generated ideas with external, model-based verifiers, enhancing planning accuracy and reliability.

Introduction

A recent Bloomberg report reveals that OpenAI has defined five levels of artificial intelligence.

OpenAI considers us to be currently at Level 1 and inching closer to Level 2, whose key feature is the capability to reason and plan. These capabilities are also foundational for autonomous AI agents: as such agents become more prevalent, their ability to reason and plan effectively will fundamentally change how we live and work.

This post explores a well-known paper in this domain by Kambhampati et al., titled "LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks."

Understanding Reasoning and Planning

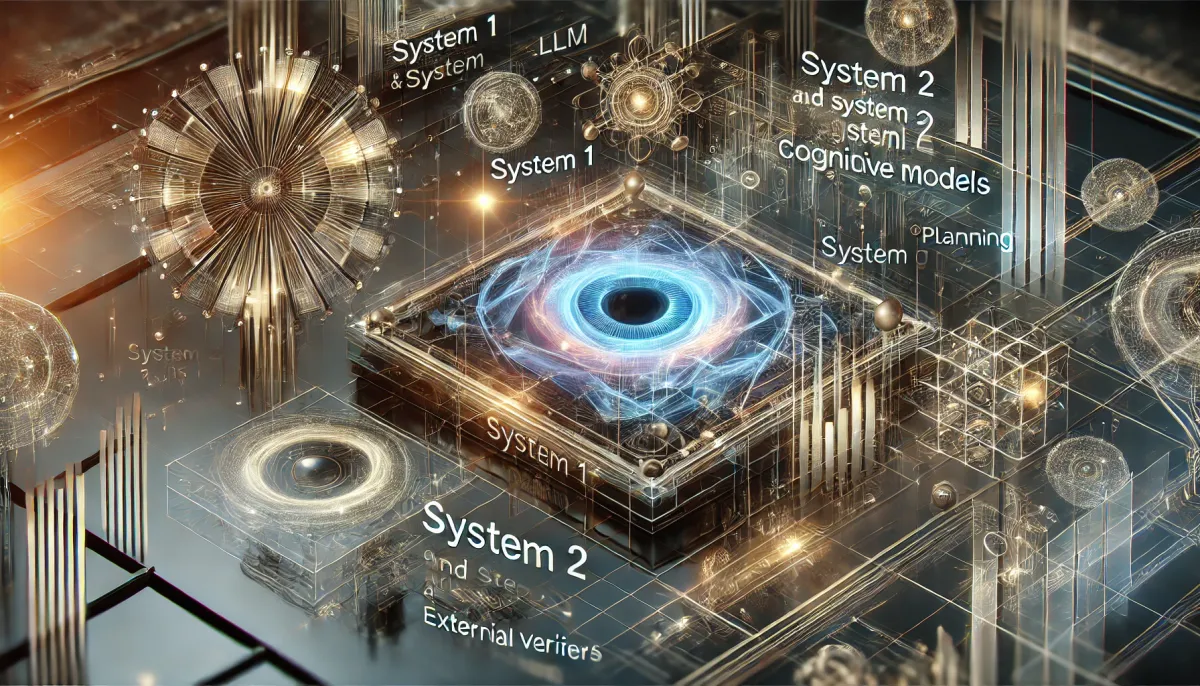

In the paper, the authors highlight that current LLMs, which generate tokens in constant time, may not be capable of principled reasoning on their own. To understand this, it's useful to consider the cognitive model of System 1 and System 2. System 1 is characterized by fast, automatic, and intuitive thinking—quick responses that occur without conscious thought. On the other hand, System 2 involves slow, deliberate, and logical reasoning, requiring conscious effort and used for complex decision-making. It is suggested that LLMs operate similarly to a large-scale System 1, handling tasks rapidly and intuitively but potentially lacking the depth of reasoning and reflection associated with System 2.

Source: LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks

Current Limitations of LLMs in Planning

For effective planning, an agent must possess the necessary domain knowledge and be able to assemble that knowledge into an executable plan. The paper shows that the autonomous planning capabilities of LLMs are quite limited. In autonomous mode, on average only about 12% of the plans generated by the best-performing LLM tested (GPT-4) are executable without errors and achieve their intended goals. This suggests that LLMs are more likely to be retrieving approximate plans than to be genuinely planning. It highlights a significant gap in their ability to perform complex reasoning and planning tasks independently. The authors also challenge well-known claims in the literature about LLMs' planning capabilities, such as the claim that LLMs can verify their own plans.
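The criterion "executable without errors and achieving the intended goal" can be made concrete. Automated plan validators perform this kind of check; VAL is a well-known one in the planning community. Below is a minimal Python sketch of such a check, not VAL itself. It assumes a STRIPS-style encoding in which each action has preconditions, add effects, and delete effects. The `Action` class, `validate_plan` function, and two-block domain are hypothetical illustrations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A ground STRIPS-style action (hypothetical encoding for illustration)."""
    pre: frozenset      # facts that must hold before the action runs
    add: frozenset      # facts the action makes true
    delete: frozenset   # facts the action makes false


def validate_plan(init, goal, plan, actions):
    """Simulate `plan` from `init`; return (ok, message)."""
    state = set(init)
    for step, name in enumerate(plan):
        if name not in actions:
            return False, f"step {step}: unknown action {name}"
        act = actions[name]
        missing = act.pre - state
        if missing:
            return False, f"step {step}: {name} has unmet preconditions {sorted(missing)}"
        state = (state - act.delete) | act.add   # apply delete effects, then add effects
    unmet = set(goal) - state
    if unmet:
        return False, f"plan ends without achieving {sorted(unmet)}"
    return True, "plan is executable and reaches the goal"


# Toy two-block Blocksworld instance: both blocks start on the table; the goal is A on B.
ACTIONS = {
    "pickup(A)": Action(
        pre=frozenset({"clear(A)", "ontable(A)", "handempty"}),
        add=frozenset({"holding(A)"}),
        delete=frozenset({"clear(A)", "ontable(A)", "handempty"}),
    ),
    "stack(A,B)": Action(
        pre=frozenset({"holding(A)", "clear(B)"}),
        add=frozenset({"on(A,B)", "clear(A)", "handempty"}),
        delete=frozenset({"holding(A)", "clear(B)"}),
    ),
}
INIT = {"clear(A)", "clear(B)", "ontable(A)", "ontable(B)", "handempty"}
GOAL = {"on(A,B)"}

print(validate_plan(INIT, GOAL, ["pickup(A)", "stack(A,B)"], ACTIONS))
print(validate_plan(INIT, GOAL, ["stack(A,B)"], ACTIONS))
print(validate_plan(INIT, GOAL, ["pickup(A)"], ACTIONS))
```

A single step with an unmet precondition invalidates the whole plan, even if the remaining steps look plausible. This is why an approximately right plan can still fail validation.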

The LLM-Modulo Framework

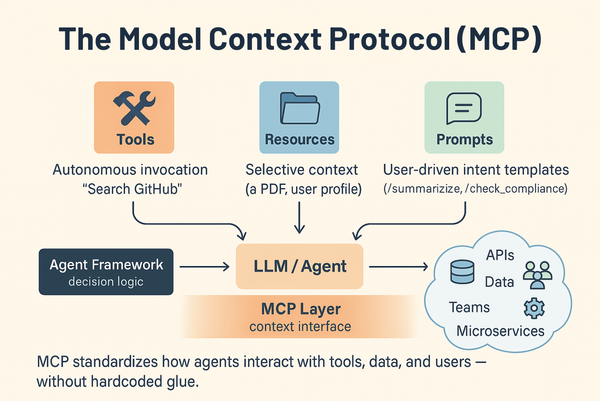

In light of these limitations, the paper introduces the LLM-Modulo Framework.

Source: LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks

The key idea is to harness the strengths of LLMs while mitigating their weaknesses by pairing them with external model-based verifiers in a bidirectional interaction. The LLM proposes, the verifiers critique, and the critiques are fed back to the LLM. Rather than simply pipelining LLMs and symbolic components, this approach offers a more tightly integrated neuro-symbolic method. LLMs can generate approximate ideas not only for candidate plans but also for domain models, problem-reduction strategies, and refinements to problem specifications. This multi-faceted role lets LLMs contribute significantly to the planning process, provided their outputs are refined and validated by external critics.
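The bidirectional interaction can be pictured as a generate-test-critique loop:

1. The LLM proposes a candidate.
2. Every critic checks it.
3. Any critiques are fed back (back-prompted) to the LLM for its next attempt.

A plan is returned only once all critics accept it. The Python sketch below assumes this simplified loop. It covers only the plan-generation role, not the domain-model or specification roles described above. `llm_modulo`, the two critics, and `StubLLM` are hypothetical names. `StubLLM` replays canned guesses instead of calling a real model, and the tea-making task is a toy.

```python
from typing import Callable, List, Optional, Tuple

Plan = List[str]
Critic = Callable[[Plan], List[str]]            # returns critiques; [] means accepted
Generator = Callable[[str, List[str]], Plan]    # stand-in for the LLM


def llm_modulo(problem: str, generate: Generator, critics: List[Critic],
               max_rounds: int = 10) -> Tuple[Optional[Plan], int]:
    """Generate-test-critique loop: return a plan only if every critic accepts it."""
    feedback: List[str] = []
    for round_no in range(1, max_rounds + 1):
        candidate = generate(problem, feedback)            # LLM proposes, given last critiques
        feedback = [msg for critic in critics for msg in critic(candidate)]
        if not feedback:                                    # all critics satisfied
            return candidate, round_no
    return None, max_rounds                                 # no verified plan found


# ---- Hypothetical toy problem: order three steps under precedence constraints ----
REQUIRED = {"boil_water", "add_teabag", "pour_water"}
BEFORE = [("boil_water", "pour_water"), ("add_teabag", "pour_water")]


def coverage_critic(plan: Plan) -> List[str]:
    return [f"missing step {s}" for s in sorted(REQUIRED - set(plan))]


def ordering_critic(plan: Plan) -> List[str]:
    return [f"{a} must come before {b}" for a, b in BEFORE
            if a in plan and b in plan and plan.index(a) > plan.index(b)]


class StubLLM:
    """Replays canned guesses; a real system would prompt an LLM with the feedback."""

    def __init__(self, guesses: List[Plan]):
        self.guesses = guesses
        self.prompts: List[List[str]] = []   # feedback each call would have received

    def __call__(self, problem: str, feedback: List[str]) -> Plan:
        self.prompts.append(list(feedback))
        return self.guesses[min(len(self.prompts), len(self.guesses)) - 1]


stub = StubLLM([
    ["pour_water", "boil_water"],
    ["boil_water", "pour_water", "add_teabag"],
    ["boil_water", "add_teabag", "pour_water"],
])
plan, rounds = llm_modulo("make tea", stub, [coverage_critic, ordering_critic])
print(plan, rounds)
```

In this sketch, correctness is decided by the critics rather than by the generator. A weak generator costs extra rounds (or yields `None`), but an unverified plan is never returned.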

The effectiveness of the LLM-Modulo Framework is demonstrated through case studies in classical planning and travel planning (Source: LLMs Can't Plan, But Can Help Planning in LLM-Modulo Frameworks).

These studies show that the framework can significantly improve planning accuracy. The LLM supplies candidate plans, and the external verifiers check each one, feeding their critiques back to the LLM until a candidate passes. Plans that survive this validation become robust, executable final outputs. Ensuring that final plans meet the model's specifications is crucial for their practical applicability.
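In the travel-planning setting, a hard critic can be as simple as a function that checks an itinerary against explicit constraints. It phrases each violation as a critique the LLM can act on. The sketch below is hypothetical: the `Leg` record, the three constraints (budget, must-visit cities, one leg per day), and the prices are invented for illustration. They are not the paper's or any benchmark's actual checks.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Leg:
    """One day of a hypothetical itinerary."""
    day: int
    city: str
    transport_cost: float
    hotel_cost: float


def travel_critic(itinerary: List[Leg], budget: float,
                  must_visit: Iterable[str], days: int) -> List[str]:
    """Return one critique per violated hard constraint; [] means the plan passes."""
    critiques = []
    total = sum(leg.transport_cost + leg.hotel_cost for leg in itinerary)
    if total > budget:
        critiques.append(f"total cost {total:.2f} exceeds budget {budget:.2f}")
    visited = {leg.city for leg in itinerary}
    for city in sorted(set(must_visit) - visited):
        critiques.append(f"itinerary never visits {city}")
    planned_days = sorted(leg.day for leg in itinerary)
    if planned_days != list(range(1, days + 1)):
        critiques.append(f"expected exactly one leg for each of days 1-{days}, got {planned_days}")
    return critiques


trip = [Leg(1, "Paris", 120.0, 200.0), Leg(2, "Lyon", 60.0, 150.0), Leg(3, "Lyon", 0.0, 150.0)]
for critique in travel_critic(trip, budget=600.0, must_visit={"Paris", "Lyon", "Nice"}, days=3):
    print(critique)
```

Each message names a concrete violation, which makes it usable as back-prompt feedback. A plan would be accepted as final output only when the list comes back empty.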

Summary

As AI continues to evolve and integrate into more aspects of our lives, understanding and improving reasoning and planning capabilities will be critical for developing effective autonomous AI agents. Current LLMs alone may struggle with autonomous reasoning and planning, but frameworks like LLM-Modulo offer promising ways to leverage their strengths. By integrating LLMs with external verifiers, it is possible to enhance planning accuracy and reliability. This article has explored one of the well-known papers in this domain, reflecting ongoing research and debate aimed at unlocking the full potential of AI.